Information, Expression, and Immersion Symposium

A two-day symposium hosted by Virginia Tech’s Creativity + Innovation (C+I) Immersive Audio Group to discuss creative research, open challenges, and requirements for presenting auditory information in immersive environments, with the goal of supporting the artistic expression of naturalistic listening experiences for high-fidelity immersion. Thematic areas of interest included, but were not limited to:

- 3D audio

- spatialized music

- auditory displays in AR/VR/MR

- audio ambience (multiple sounds)

- auditory localization

- acoustic situation awareness

- bone conduction communication

- elevation illusion

- sonification

Day 1 (September 15)

| Time | Event | Location |
|---|---|---|
| 3:30~5:00PM | Campus Tour (departing from Learning Studio at 3:35PM) | Various Campus Locations |
| 5:00~6:00PM | Opening Reception & Demo/Poster | Learning Studio |
| 6:00~7:00PM | Concert | The Cube |

Day 2 (September 16)

| Time | Event | Location |
|---|---|---|
| 9:00~9:30AM | Welcome | Perform Studio |
| 9:30~10:30AM | Keynote 1 - Tomasz Letowski | Perform Studio |
| 10:30~11:00AM | Coffee Break | Perform Lobby |
| 11:00AM~12:00PM | VT faculty lightning talks (Session I) | Perform Studio |
| 12:00~1:00PM | Lunch Break (provided) | Balcony Lobby |
| 1:00~2:00PM | Keynote 2 - Dennis J. Folds | Perform Studio |
| 2:00~2:45PM | VT faculty lightning talks (Session II) | Perform Studio |
| 2:45~3:15PM | Coffee Break | Perform Lobby |
| 3:15~4:15PM | Keynote 3 - John G. Casali | Perform Studio |
| 4:15~5:00PM | Round-table Discussion (moderator: Rafael Patrick) | Perform Studio |
| 5:00~5:15PM | Wrap-up/Closing | Perform Studio |

Faculty lightning talks were presented on Monday in the Perform Studio, Moss Arts Center, in two sessions: 11:00AM~12:00PM and 2:00~2:45PM.

Session I: 11:00AM~12:00PM

Doug Bowman, Ph.D

The Role of Immersive Audio in Serious VR Applications

Myounghoon Jeon, Ph.D

From Kitchen to Aquarium: The Spectrum of Sonification across Information, Expression, Immersion

Eric Lyon, Ph.D

The Art of Immersive Sound - Places and Possibilities

Rafael N.C. Patrick, Ph.D

Bone Conduction Communication: The other auditory sense

Session II: 2:00~2:45PM

Ivica Ico Bukvic, Ph.D

Establishing Foundations for the Immersive Exocentric Data Sonification using the Cube and D4 Library

Laura Iancu, MFA

Poetry on Horsemint Island

Sang Won Lee, Ph.D

Crowd in C - Crowdsourced Music Performance Through Social Interactivity

Concert Program

Traces, Ivica Ico Bukvic

"Traces" is an interactive work for Locus, a glove-based interaction system designed specifically for the Cube. It was created as part of the National Science Foundation-sponsored Spatial Audio Data Immersive Experience (SADIE) research to study the limits of human spatial aural perception, and to explore the expressive potential of spatial sound as the primary dimension driving musical structure. "Traces" is inspired in part by SADIE’s study of the sun’s influence on Earth’s atmospheric data.

The Cascades, Eric Lyon

"The Cascades" presents tetrahedral field recordings of water sounds made at various locations along the Cascades trail in the Jefferson National Forest. The original four-channel recordings are staged to induce different kinds of spatial perception, using spatial configurations unique to the Cube’s 3D speaker array. The Cube’s multi-story High Density Loudspeaker Array (HDLA) is used to manipulate elevation cues, and the 64-channel ring on the first catwalk localizes sounds without relying on phantom images, giving some passages an unusually clear spatial image.

Bunga Bunga, Tanner Upthegrove

"Bunga Bunga" is designed for live encoding and decoding with higher-order ambisonics (HOA). Up to 128 tracks in the digital audio workstation Reaper send individual audio channels, along with control data describing each source’s position in 3-D space, to a sound spatialization engine in Max/MSP. I typically use ambisonic equivalent panning (AEP) to pan individual sound sources within a virtual 3-D volume, an approach that scales to other 3-D loudspeaker arrays. In the Cube, I use at least 13th-order ambisonics with an adjustable center zone size. Paired with a 3-D convolution reverb process I designed, this method simulates real room acoustics in real time at low computational cost. For this reproduction of "Bunga Bunga", I captured a live performance in 7th-order ambisonics using SN3D normalization and ACN channel ordering.
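The note above refers to 7th- and 13th-order ambisonics and to SN3D normalization with ACN ordering (the convention commonly called AmbiX). As an illustration only, and not the composer’s Reaper/Max/MSP implementation, the sketch below shows what those terms mean numerically under the standard definitions: an order-N signal set has (N+1)² channels, ACN places the spherical-harmonic component of degree n and index m at channel n² + n + m, and SN3D scales each component by sqrt((2 − δ_m0)(n − |m|)!/(n + |m|)!). All function names are hypothetical helpers.

```python
import math


def channel_count(order: int) -> int:
    """Number of ambisonic components for a full-sphere signal of the given order."""
    return (order + 1) ** 2


def acn_index(n: int, m: int) -> int:
    """ACN channel number for degree n and index m, with -n <= m <= n."""
    if n < 0 or not -n <= m <= n:
        raise ValueError("require n >= 0 and -n <= m <= n")
    return n * n + n + m


def acn_to_degree_order(acn: int) -> tuple[int, int]:
    """Inverse of acn_index: recover (n, m) from an ACN channel number."""
    n = math.isqrt(acn)  # degree is the floor of the square root
    m = acn - n * n - n
    return n, m


def sn3d_factor(n: int, m: int) -> float:
    """SN3D (Schmidt semi-normalized) weight for component (n, m)."""
    am = abs(m)
    delta = 1 if m == 0 else 0
    return math.sqrt((2 - delta) * math.factorial(n - am) / math.factorial(n + am))


def n3d_factor(n: int, m: int) -> float:
    """N3D weight, which differs from SN3D by a factor of sqrt(2n + 1)."""
    return sn3d_factor(n, m) * math.sqrt(2 * n + 1)


if __name__ == "__main__":
    print(channel_count(7))   # 64
    print(channel_count(13))  # 196
    print(acn_index(2, 0))    # 6
```

Under these definitions, the 7th-order recording corresponds to 64 ambisonic channels, while 13th-order rendering in the Cube implies 196 components, which would then be decoded to the loudspeaker feeds.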

Crowd in C, Sang Won Lee

"Crowd in C" uses a distributed musical instrument implemented entirely in a web browser, so that audience members can easily take part in music making with their smartphones. The web-based instrument is designed to encourage the audience to play music together and to interact with one another. Each participant composes a short tune that serves as their musical profile. The musical profile is a metaphor borrowed from online dating websites, and the network the instrument creates mimics those social interactions: a user writes a personal profile, browses other profiles, likes someone, and mingles with other users. A live coder can actively advance the music and orchestrate the crowd by changing the instrument on the fly.

Posters and Demos

Caroline Flynn (Poster)

Pulling at the Heartstrings: Development of a Cellular Stethoscope

Woohun Joo (Poster)

Sonifyd: A Graphical Approach for Sound Synthesis and Synesthetic Visual Expression

Sangjin Ko (Poster)

Modeling the Effects of Auditory Display Takeover Requests on Drivers’ Behavior in Autonomous Vehicles

Seul Chan Lee (Poster)

Autonomous Driving with an Agent: Speech Style and Embodiment

Chihab Nadri (Poster/Demo)

Visual Art Sonification (Poster); NADS MiniSim Driving Simulator (Demo)

Harsh Sanghavi (Poster)

Takeover Request Displays in Semi-Autonomous Vehicles

Disha Sardana (Poster)

Studies in Spatial Aural Perception: Establishing Foundations for Immersive Sonification

Disha Sardana, Woohun Joo (Poster)

Introducing Locus: a NIME for Immersive Exocentric Aural Environments

Aline R. S. S. de Souza (Poster/Demo)

Sounds and Spatialization with Csound

Kyriakos Tsoukalas (Poster)

Soundscapes with L2OrkMotes

Rafael Patrick (Poster)

Auditory Conduction Equal-Loudness (ACE): A perceptual method of comparing relative frequency response

Weiqi Yuan (Demo)

Algorithmic Music Creation in Virtual Reality: How Virtual Programming Language and Music Facilitate Computational Thinking

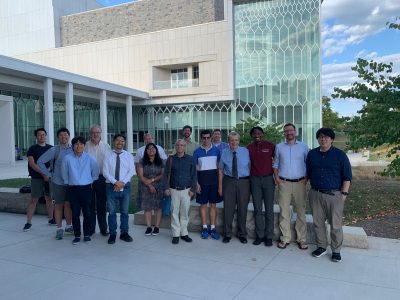

Chairs

Ivica Ico Bukvic, Ph.D

Rafael N.C. Patrick, Ph.D

Music Chair

Eric Lyon, Ph.D

Steering Committee

Myounghoon Jeon, Ph.D

Sang Won Lee, Ph.D

Eric Lyon, Ph.D

Tanner Upthegrove, MFA, CTS

Administrative Support

Meaghan Dee

Linda Hazelwood

Adriane Keller

Claire Pierce

Martha Sullivan

Donna Wertalik

Holly Williams

Melissa Wyers